According to Taiwan’s Economic Daily News, NVIDIA plans to launch the next-generation GB300 AI server product line at the GTC conference in March next year.

Foxconn and Quanta have already begun research and development on the GB300 to secure an early position. NVIDIA is understood to have preliminarily settled the GB300 order allocation, with Foxconn remaining its largest supplier. The GB300 is expected to reach the market in the first half of next year, ahead of global competitors. Industry sources say Quanta and Inventec are also key partners for NVIDIA’s GB300 AI servers. Quanta ranks second to Foxconn in order share, while Inventec has significantly increased its share compared to the GB200, which positions both companies to benefit from the GB300 generation.

GPU: B200 → B300

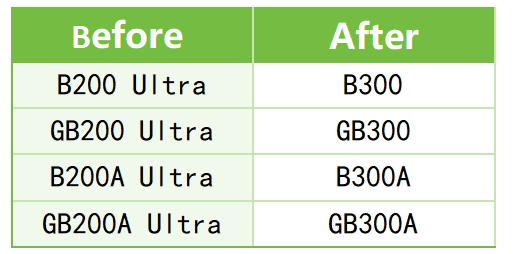

In October this year, NVIDIA rebranded all Blackwell Ultra products to the B300 series, which uses CoWoS-L technology, driving demand for advanced packaging solutions.

Performance Boost

The new B300 GPU delivers roughly 1.5 times the FP4 floating-point performance of the previous B200.

TDP (Thermal Design Power)

The B300 GPU’s power consumption can reach up to 1400W, compared to approximately 1000W for the B200, an increase of about 40%. To sustain this power level, both the power delivery and the cooling systems need to keep up.
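To put the power figures above in rack-level terms, here is a rough back-of-the-envelope sketch. The per-GPU wattages come from the article; the count of 72 GPUs per rack is an assumption inferred from the NVL72 name, and the result covers GPUs only (no CPUs, switches, or cooling overhead).

```python
# Per-GPU peak power figures quoted in the article, in watts.
B200_TDP_W = 1000
B300_TDP_W = 1400

# Assumption: an NVL72 rack holds 72 GPUs (inferred from the product name).
GPUS_PER_RACK = 72


def relative_increase(old: float, new: float) -> float:
    """Fractional increase from old to new (0.4 means +40%)."""
    return (new - old) / old


def rack_gpu_power_kw(tdp_w: float, gpus: int = GPUS_PER_RACK) -> float:
    """GPU-only power for one rack, in kilowatts."""
    return tdp_w * gpus / 1000


increase = relative_increase(B200_TDP_W, B300_TDP_W)
print(f"Per-GPU increase: {increase:.0%}")
print(
    f"GPU power per rack: {rack_gpu_power_kw(B200_TDP_W):.1f} kW"
    f" -> {rack_gpu_power_kw(B300_TDP_W):.1f} kW"
)
```

Under these assumptions the GPUs alone move from about 72 kW to about 101 kW per rack, which is the scale of load the cooling and power changes described in the following sections have to absorb.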

Upgraded Liquid Cooling System

Liquid Cold Plate + Enhanced UQD Quick-Disconnect Connector: At 1400W per GPU, air cooling is no longer sufficient. The GB300 therefore uses liquid cold plates and upgraded UQD (Universal Quick Disconnect) connectors for improved efficiency and reliability.

New Cabinet Design: The cabinet layout, piping, and cooling channels have been redesigned to accommodate a larger number of liquid cold plates, liquid cooling components, and UQD quick-disconnect connectors.

Significant Upgrade to HBM3e Memory

192 GB → 288 GB: Remember the 192 GB of HBM3e per GPU in the GB200 era? Each B300 GPU now carries 288 GB of HBM3e, a 50% increase. The extra capacity lets more of a model stay resident on each GPU, which makes it highly attractive for large models with hundreds of billions of parameters.

Stacking 8 Layers → 12 Layers: Compared to the previous 8-layer stack, the new configuration uses a 12-layer stack, which accounts for the 50% capacity increase. Layer count by itself does not set bandwidth, which depends on interface width and per-pin speed, so any bandwidth gain should be checked against the final specifications.
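The capacity figures above are consistent with simple scaling by layer count, and they can be related to model size with a rough weights-only estimate. The sketch below assumes the number of stacks and the per-layer die capacity stay the same between generations; the 200-billion-parameter model and the one-byte-per-parameter format are hypothetical examples, and the estimate ignores activations, KV cache, and optimizer state.

```python
def scaled_capacity_gb(capacity_gb: float, old_layers: int, new_layers: int) -> float:
    """Capacity after changing stack height, assuming same stack count and die density."""
    return capacity_gb * new_layers / old_layers


def weights_gb(params_billions: float, bytes_per_param: float) -> float:
    """Weights-only footprint in GB (1e9 params * bytes, divided by 1e9 bytes per GB)."""
    return params_billions * bytes_per_param


def fits(params_billions: float, bytes_per_param: float, capacity_gb: float) -> bool:
    """True if the weights alone fit in the given memory capacity."""
    return weights_gb(params_billions, bytes_per_param) <= capacity_gb


B200_GB = 192  # figure from the article
B300_GB = scaled_capacity_gb(B200_GB, old_layers=8, new_layers=12)
print(f"Scaled capacity: {B300_GB:.0f} GB")

# Hypothetical example: a 200B-parameter model stored at 1 byte per parameter.
for name, cap in (("B200", B200_GB), ("B300", B300_GB)):
    print(f"{name}: 200B params @ 1 byte fits on one GPU -> {fits(200, 1, cap)}")
```

Scaling 192 GB by 12/8 gives exactly the 288 GB quoted for the B300, and the hypothetical 200 GB of weights fits on a single B300 but not on a single B200.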

Network and Transmission

Network Card: ConnectX-7 → ConnectX-8: The GB300 moves from the ConnectX-7 network card to the ConnectX-8. The upgrade is expected to improve bandwidth, latency, and reliability, which supports smooth data transfer in large-scale clusters.

Optical Modules: 800G → 1.6T: Moving from 800G to 1.6T doubles per-module bandwidth, comparable to shifting from second to fourth gear. For workloads dominated by massive data exchange, such as HPC and AI training, the extra bandwidth is a lifesaver.
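As a rough illustration of what the 800G to 1.6T step means in practice, the sketch below computes the ideal time to move a payload over a single link at full line rate. The 1 TB payload is a hypothetical example, and the calculation ignores protocol overhead, congestion, and any parallel links.

```python
def transfer_seconds(payload_gigabytes: float, link_gbps: float) -> float:
    """Ideal time to move a payload over one link running at full line rate."""
    payload_gigabits = payload_gigabytes * 8  # 8 bits per byte
    return payload_gigabits / link_gbps


PAYLOAD_GB = 1000  # hypothetical 1 TB payload, e.g. a large checkpoint

for label, gbps in (("800G", 800), ("1.6T", 1600)):
    print(f"{label}: {transfer_seconds(PAYLOAD_GB, gbps):.1f} s")
```

Doubling the line rate halves the ideal transfer time, from 10 seconds to 5 seconds for this example payload; real-world gains depend on how much of the workload is actually network-bound.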

Power Management and Reliability

New Additions: Standardized Capacitor Tray & BBU: The GB300 NVL72 cabinet now features a standardized capacitor tray with an optional Battery Backup Unit (BBU) system. Each BBU module costs around $300, and the entire GB300 system requires about 5 BBU modules, for a total of approximately $1,500. While this may seem costly, it is a crucial investment for riding through sudden power loss in high-load, high-power AI environments.

High Demand for Supercapacitors: Each NVL72 rack requires over 300 supercapacitors to handle instantaneous current surges and protect the system. At $20-25 each, that comes to at least $6,000 to $7,500 per rack, a significant expense but a necessary one for the power-hungry GB300.
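The cost figures in this section can be tallied with a short calculation. All unit prices and counts below are the approximate numbers quoted in the article; because the article says "over 300" supercapacitors, the supercapacitor totals should be read as lower bounds.

```python
# Approximate figures quoted in the article.
BBU_UNIT_COST_USD = 300
BBU_MODULES_PER_SYSTEM = 5
SUPERCAPS_PER_RACK = 300            # "over 300", so treat as a lower bound
SUPERCAP_UNIT_COST_USD = (20, 25)   # low and high unit price

bbu_total = BBU_UNIT_COST_USD * BBU_MODULES_PER_SYSTEM
supercap_low = SUPERCAPS_PER_RACK * SUPERCAP_UNIT_COST_USD[0]
supercap_high = SUPERCAPS_PER_RACK * SUPERCAP_UNIT_COST_USD[1]

print(f"BBU modules: ${bbu_total:,}")
print(f"Supercapacitors: ${supercap_low:,} to ${supercap_high:,}")
print(f"Combined: ${bbu_total + supercap_low:,} to ${bbu_total + supercap_high:,}")
```

On these numbers the supercapacitors, not the BBU modules, make up most of the added power-protection cost per rack.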

Major Memory Revolution

LPCAMM Steps onto the Server Stage: For the first time, NVIDIA has introduced the LPCAMM (Low-Power Compression Attached Memory Module) standard to server compute boards. Previously seen in thin-and-light laptops, this “little guy” is now taking on the high-load demands of servers. Its arrival suggests that server design is also moving toward more compact, lower-power memory.

Replacing Traditional DIMMs?: LPCAMM offers a more compact, energy-efficient, and easily maintainable solution. If it eventually replaces traditional RDIMM and LRDIMM modules, it could cause a significant shake-up in the server memory market.

The NVIDIA GB300 “Blackwell Ultra” is set to elevate the AI computing power ceiling significantly. The enhancements in GPU cores, the massive HBM3e memory support, and the comprehensive upgrades in cooling and power management all indicate that large models and large-scale computing are the unstoppable trends of the future. Additionally, with the inclusion of LPCAMM and 1.6T network bandwidth, the efficiency of cloud data centers and supercomputing centers will be further enhanced.

It is clear that the “arms race” for AI computing power is only just beginning. Those who take the lead in hardware and software ecosystems may well dominate the next wave of the AI revolution.