Name Origin

G – Grace CPU

B – Blackwell GPU

200 – Generation

NVL – NVLink Interconnect Technology

72 – 72 GPUs

Computing Power Configuration

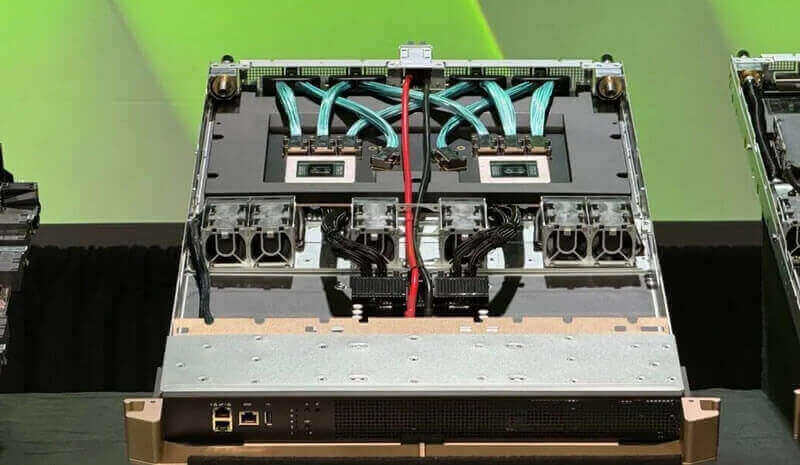

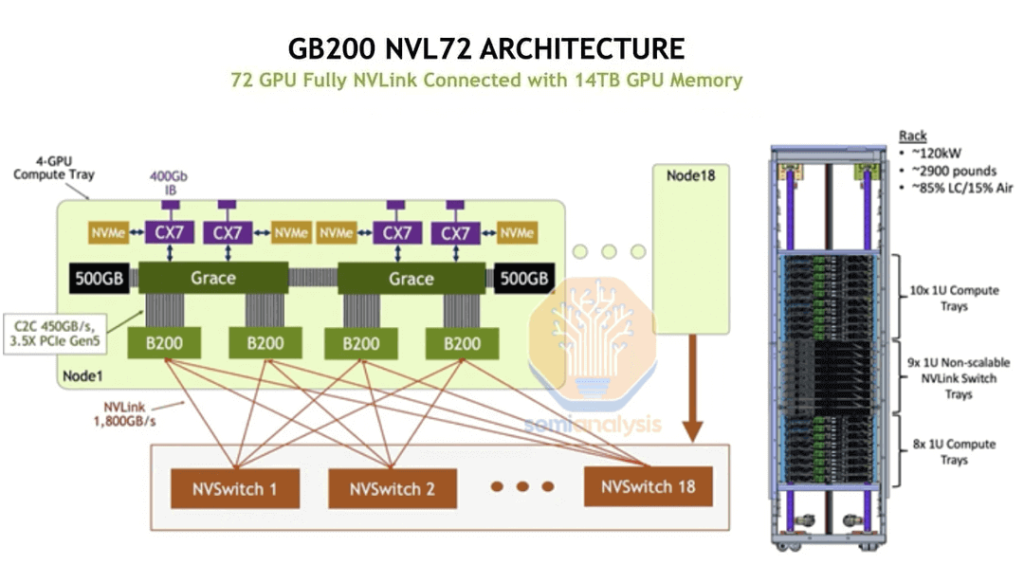

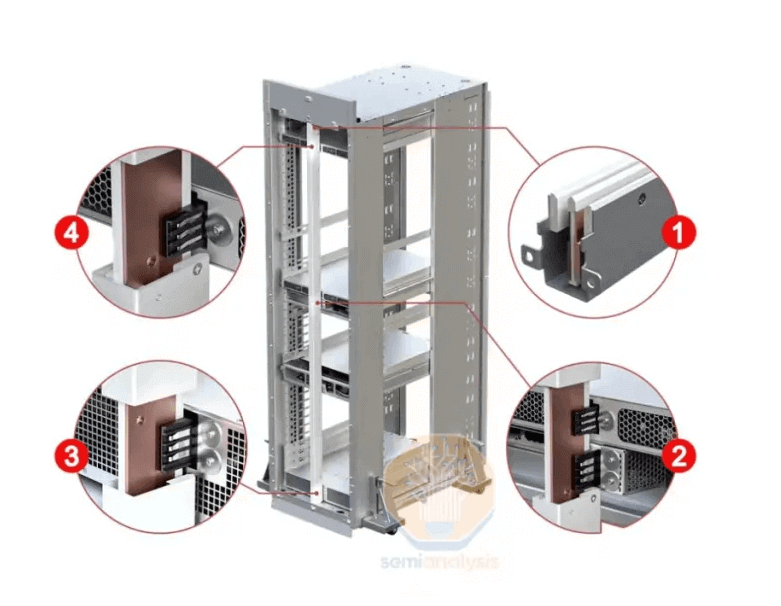

Each NVL72 system contains 18 compute trays, which are the basic units of its computing power; each tray serves as one compute node. The building block of the GB200 NVL72 is the Bianca board superchip, which pairs one Grace CPU (NVIDIA's Arm-based CPU) with two Blackwell GPUs. Each compute tray holds two superchips, i.e., 2 CPUs + 4 GPUs, so the 18 compute trays provide 18 * 4 = 72 GPUs (and 36 Grace CPUs).
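The tray arithmetic above can be written out as a short Python sketch. The constant and function names are illustrative only; the counts (18 trays, 2 Bianca superchips per tray, 1 Grace CPU and 2 Blackwell GPUs per superchip) are the ones given in the text.

```python
# Illustrative constants; the values are the counts stated in the text.
TRAYS_PER_RACK = 18
SUPERCHIPS_PER_TRAY = 2   # Bianca boards per compute tray
CPUS_PER_SUPERCHIP = 1    # one Grace CPU
GPUS_PER_SUPERCHIP = 2    # two Blackwell GPUs


def rack_totals(trays: int = TRAYS_PER_RACK) -> dict:
    """Return superchip, CPU and GPU counts for a rack with `trays` compute trays."""
    superchips = trays * SUPERCHIPS_PER_TRAY
    return {
        "superchips": superchips,
        "cpus": superchips * CPUS_PER_SUPERCHIP,
        "gpus": superchips * GPUS_PER_SUPERCHIP,
    }


print(rack_totals())  # {'superchips': 36, 'cpus': 36, 'gpus': 72}
```

Passing a different tray count (for example `rack_totals(1)`) gives the per-tray view of 2 CPUs and 4 GPUs.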

Each compute tray is a 1U unit that plugs directly into the chassis. It is the smallest unit for day-to-day deployment and maintenance, and each tray draws up to 5400W.

The full NVL72 system provides 13.8TB of GPU memory (HBM): each B200 carries 192GB, 112GB more than the H100's 80GB. Per-GPU memory bandwidth also rises from 3.35TB/s on the H100 to 8TB/s. In addition, the system includes about 17TB of LPDDR5X CPU memory, with 480GB attached to the Grace CPU on each Bianca board.
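The memory totals follow directly from the per-device figures. This sketch assumes decimal units (1 TB = 1000 GB), which is how 72 * 192GB works out to the quoted 13.8TB. The CPU total comes to 17.3TB, which the text rounds to 17TB. Variable names are illustrative.

```python
# Per-device figures from the text; totals use decimal units (1 TB = 1000 GB).
GPUS = 72
GRACE_CPUS = 36
HBM_PER_GPU_GB = 192
H100_HBM_GB = 80
LPDDR5X_PER_CPU_GB = 480
B200_BW_TB_S = 8.0
H100_BW_TB_S = 3.35

hbm_total_gb = GPUS * HBM_PER_GPU_GB                # 13,824 GB
lpddr5x_total_gb = GRACE_CPUS * LPDDR5X_PER_CPU_GB  # 17,280 GB
hbm_gain_gb = HBM_PER_GPU_GB - H100_HBM_GB          # 112 GB per GPU
bw_ratio = B200_BW_TB_S / H100_BW_TB_S              # per-GPU bandwidth gain

print(f"GPU HBM total:     {hbm_total_gb / 1000:.1f} TB")      # 13.8 TB
print(f"CPU LPDDR5X total: {lpddr5x_total_gb / 1000:.1f} TB")  # 17.3 TB
print(f"HBM gain vs H100:  {hbm_gain_gb} GB per GPU")
print(f"Bandwidth gain:    {bw_ratio:.2f}x")                    # 2.39x
```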

Cooling Configuration

In the H100 generation, each GPU consumed 700W. To leave enough room for airflow, an 8-GPU H100 server occupied 6-8U. In the B200 generation, each GPU consumes 1200W, which needs even more space for air cooling, so an 8 * B200 server grows to 10U.

A GB200 Bianca board draws about 2700W. At that density, airflow within a 19-inch rack cannot remove heat fast enough, so liquid cooling is required. Liquid cooling in turn lets each node fit within 1-2U, significantly improving both space utilization and cooling efficiency.
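One way to see why air cooling runs out is to compare power per rack unit across the three generations. This is a rough sketch under a stated assumption that is not from the text: for the 8-GPU servers, only GPU power is counted (CPUs, NICs and fans are ignored), so their real density is somewhat higher. The GB200 figure uses two 2700W Bianca boards per 1U tray.

```python
# Rough power-density comparison.
# Assumption: for the 8-GPU servers only GPU power is counted.
configs = {
    "8x H100 in 8U": (8 * 700, 8),
    "8x H100 in 6U": (8 * 700, 6),
    "8x B200 in 10U": (8 * 1200, 10),
    "GB200 tray in 1U": (2 * 2700, 1),  # two Bianca boards per tray
}


def watts_per_u(watts: float, units: int) -> float:
    """Average power per rack unit."""
    return watts / units


for name, (watts, units) in configs.items():
    print(f"{name:<18} {watts:>5} W  {watts_per_u(watts, units):>6.0f} W/U")
```

Even with the generous assumption for the air-cooled servers, the GB200 tray concentrates roughly 5.6 to 7.7 times more power into each rack unit.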

- At the Server Level: Cold plates handle the cooling of the CPUs and GPUs on the Bianca boards. However, the front of each compute tray and NVSwitch tray still holds many other components, such as network cards, PDUs, management cards, and drives, which remain air-cooled. Typically, the liquid-to-air ratio for a compute tray is about 8.5:1.5, i.e., roughly 85% of the heat is removed by liquid and 15% by air. If scale-out networking based on ConnectX (CX) NICs expands in the future, cold plates may also be designed for the NICs.

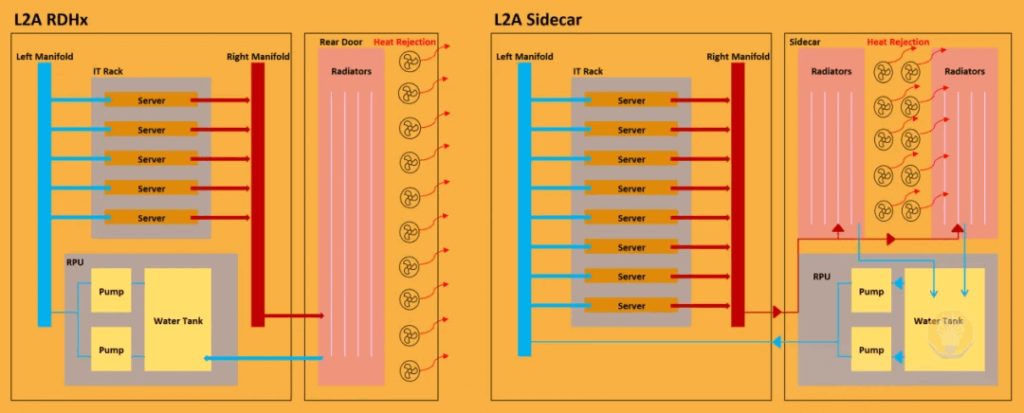

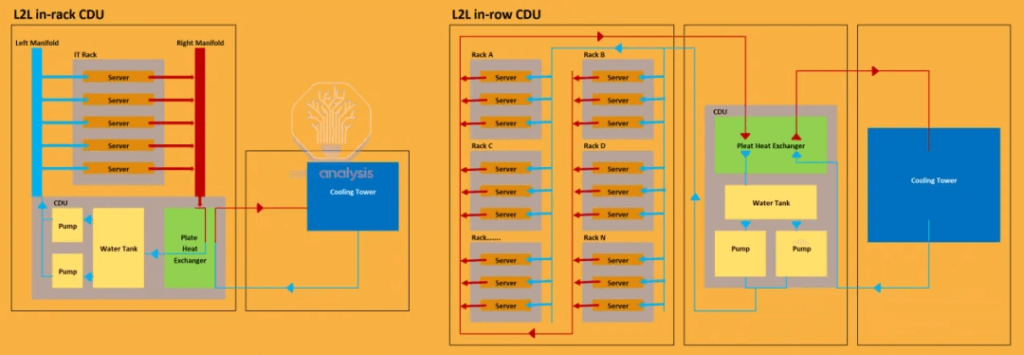
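The 8.5:1.5 split translates into watts as follows. This sketch assumes that essentially all of a tray's electrical input ends up as heat and uses the 5400W per-tray maximum from the text. The rack figure covers the 18 compute trays only; the NVSwitch trays add their own air-cooled load, which is not modeled here.

```python
# Split a compute tray's heat between the liquid loop and room air.
# Assumption: essentially all electrical input ends up as heat.
TRAY_POWER_W = 5400                   # per-tray maximum from the text
LIQUID_SHARE = 8.5 / (8.5 + 1.5)      # 8.5:1.5 liquid-to-air ratio -> 0.85
TRAYS_PER_RACK = 18


def heat_split(power_w: float, liquid_share: float = LIQUID_SHARE) -> tuple[float, float]:
    """Return (liquid_w, air_w) for the given heat load."""
    liquid_w = power_w * liquid_share
    return liquid_w, power_w - liquid_w


liquid_w, air_w = heat_split(TRAY_POWER_W)
print(f"per tray: liquid {liquid_w:.0f} W, air {air_w:.0f} W")
print(f"18 compute trays: air-side load {air_w * TRAYS_PER_RACK / 1000:.2f} kW")
```

At full load each tray still sheds about 810W into the room, or roughly 14.6kW across the compute trays of one rack.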

- At the Rack Level: Various liquid cooling solutions are currently available:

- Retrofit Solutions for Older Air-Cooled Rooms: There are two options, RDHx (rear-door heat exchanger) and Sidecar; the former provides 30-40kW of cooling and the latter 70-140kW. Both add liquid cooling to each rack without altering the existing air-cooled HVAC units: a coolant carries heat from the rack to a radiator, where it is exchanged with the room air, so the room itself stays air-cooled. Modifications are minimal, avoiding extensive pipework renovation.

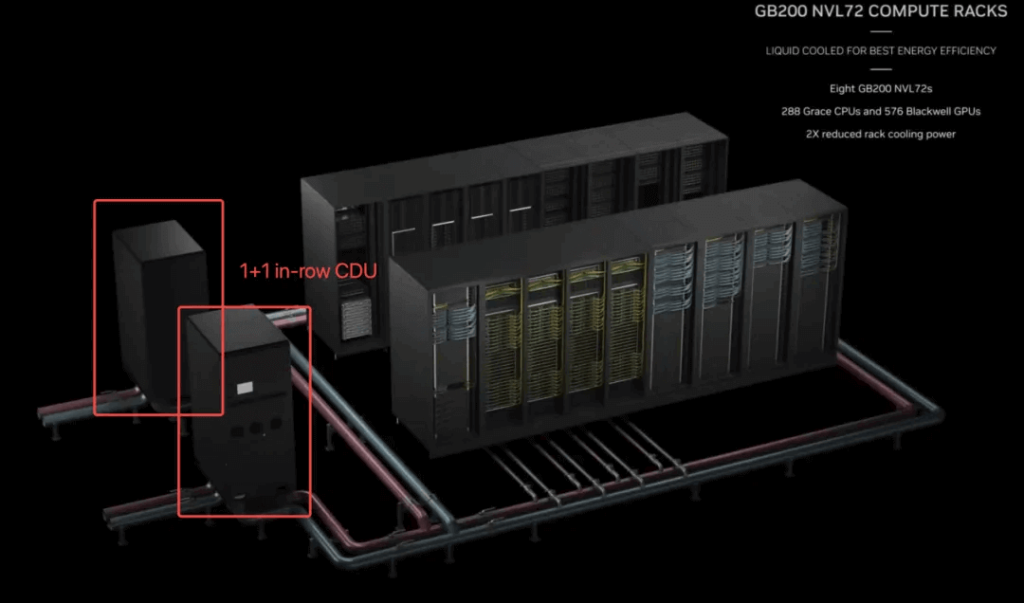

- New High-Density Data Centers: For new high-density deployments such as the NVL72, in-rack CDUs and in-row CDUs are the main options. An in-rack CDU occupies more than 4U inside the rack, typically provides around 80kW of cooling, and offers no redundancy. An in-row CDU, by contrast, sits outside the individual racks and serves several racks or rows; it is deployed as a pair of CDU systems, providing 800kW-2000kW of cooling with redundancy. NVIDIA's official material for the NVL576 cluster shows the in-row solution.
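The capacity figures above can be turned into a quick screening check. This is a sketch, not a sizing method. It takes the 120kW rated rack power from the power section as the heat load, which overstates the liquid load because about 15% of the heat leaves by air. It also ignores redundancy, water temperature and flow rate. The capacity ranges are the ones quoted in the text, and the function names are hypothetical.

```python
# Capacity ranges in kW, as quoted in the text (in-rack CDU: "around 80 kW").
COOLING_OPTIONS_KW = {
    "RDHx": (30, 40),
    "Sidecar": (70, 140),
    "In-rack CDU": (80, 80),
    "In-row CDU": (800, 2000),
}


def options_for_rack(rack_kw: float) -> list[str]:
    """Options whose upper capacity bound can absorb one rack of rack_kw."""
    return [name for name, (_, high) in COOLING_OPTIONS_KW.items() if high >= rack_kw]


def racks_per_in_row_cdu(rack_kw: float) -> tuple[int, int]:
    """Whole racks of rack_kw one in-row CDU could serve, at its low/high capacity."""
    low, high = COOLING_OPTIONS_KW["In-row CDU"]
    return int(low // rack_kw), int(high // rack_kw)


print(options_for_rack(120))      # ['Sidecar', 'In-row CDU']
print(racks_per_in_row_cdu(120))  # (6, 16)
```

Under these assumptions, an RDHx or a single in-rack CDU cannot absorb a full NVL72 rack. A Sidecar only just covers it at the top of its range. One in-row CDU could serve roughly 6 to 16 such racks.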

Network Configuration

NVLink Network

The NVL72 uses a fully interconnected NVLink architecture, so GPU-to-GPU traffic within an NVL72 does not need an RDMA (IB/RoCE) network.

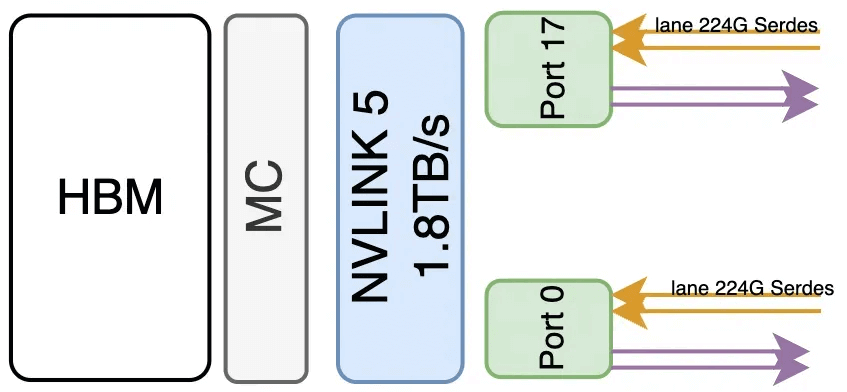

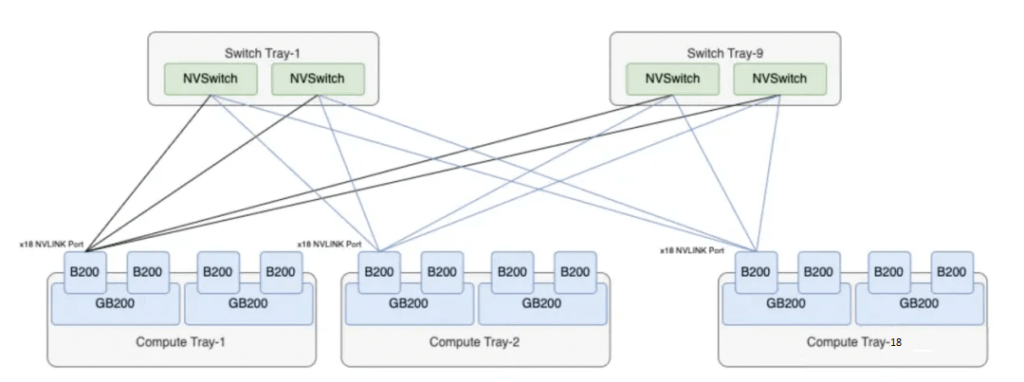

The NVL72 system contains 9 NVSwitch trays, each holding 2 NVLink switch chips. Each chip provides 4 * 1.8TB/s = 7.2TB/s of bandwidth, or 57.6Tbps, slightly more than the 51.2Tbps of Broadcom's widely used Tomahawk 5 (TH5) switch chip. Each NVSwitch tray therefore provides 2 * 4 * 1.8TB/s = 14.4TB/s of NVLink bandwidth.
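The switch bandwidth figures can be reproduced from the per-link rate. This sketch keeps everything in integer GB/s to avoid rounding noise and converts bytes to bits (x8) only for the Tbps comparison. It computes the rack total twice, once from the switch side and once from the GPU side (72 GPUs * 18 links). The two totals agree at 129.6TB/s, which shows that every switch port is used. Constant names are illustrative.

```python
LINK_GB_S = 100             # one NVLink 5.0 link, bidirectional GB/s
LINKS_PER_SWITCH_CHIP = 72
SWITCH_CHIPS_PER_TRAY = 2
SWITCH_TRAYS = 9
GPUS = 72
LINKS_PER_GPU = 18
TH5_TBPS = 51.2             # Broadcom Tomahawk 5, for comparison

chip_gb_s = LINKS_PER_SWITCH_CHIP * LINK_GB_S      # 7200 GB/s = 4 * 1.8 TB/s
tray_gb_s = chip_gb_s * SWITCH_CHIPS_PER_TRAY      # 14400 GB/s
switch_side_gb_s = tray_gb_s * SWITCH_TRAYS        # all 9 switch trays
gpu_side_gb_s = GPUS * LINKS_PER_GPU * LINK_GB_S   # 72 GPUs * 1.8 TB/s

chip_tbps = chip_gb_s * 8 / 1000                   # bytes -> bits
print(f"per chip: {chip_gb_s / 1000} TB/s = {chip_tbps} Tb/s "
      f"({chip_tbps / TH5_TBPS:.3f}x TH5)")
print(f"per tray: {tray_gb_s / 1000} TB/s")
print(f"switch side matches GPU side: {switch_side_gb_s == gpu_side_gb_s}")
```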

The GB200 uses NVLink 5.0: each B200 connects to the NVLink switch chips through 18 NVLink 5.0 links. An NVL72 therefore has 72 * 18 = 1296 NVLink 5.0 ports. Each port provides 100GB/s bidirectional and consists of 4 differential signal pairs, each carried over its own copper cable, for a total of 1296 * 4 = 5184 physical connections.

As depicted, all 9 NVSwitch trays in a GB200 NVL72 cabinet are used to connect the 72 B200 GPUs. Each B200 connects to each of the 18 NVSwitch chips through a single 100GB/s bidirectional NVLink 5.0 link. Each NVSwitch chip supports 7.2TB/s, corresponding to 72 NVLink 5.0 links: exactly one link for each of the 72 B200 GPUs. Because every switch port is consumed, there are no spare NVLink interfaces for expanding to larger clusters.
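The link and cable counts can be checked from both ends of the fabric. This sketch counts links from the GPU side (72 GPUs * 18 links) and from the switch side (18 switch chips * 72 links), then multiplies by the 4 differential pairs per link given in the text. The last value confirms that each GPU has exactly one link to each switch chip.

```python
GPUS = 72
LINKS_PER_GPU = 18
SWITCH_CHIPS = 9 * 2          # 9 NVSwitch trays x 2 chips
LINKS_PER_SWITCH_CHIP = 72
PAIRS_PER_LINK = 4            # differential pairs (copper cables) per link

links_from_gpus = GPUS * LINKS_PER_GPU                          # 1296
links_from_switches = SWITCH_CHIPS * LINKS_PER_SWITCH_CHIP      # 1296
cables = links_from_gpus * PAIRS_PER_LINK                       # 5184
links_per_gpu_per_chip = links_from_gpus // (GPUS * SWITCH_CHIPS)

print(links_from_gpus, links_from_switches, cables, links_per_gpu_per_chip)
# 1296 1296 5184 1
```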

The NVLink network within the GB200 NVL72 is fully connected: any of the 72 B200 GPUs can reach any other through a single NVSwitch hop. Each switch chip has 4 NVLink ports, each wired with 72 copper cables. Using copper instead of optics significantly reduces power consumption and cost, saving up to 20kW per system. The internal communication structure of the NVL72 is illustrated in the following diagram:

Non-NVLink Network (RDMA + High-Speed TCP Network)

Each compute tray includes 4 OSFP slots and 2 QSFP slots. The network port layout on the compute tray’s front panel is shown below:

- The 2 QSFP slots, driven by a BlueField-3 DPU, provide 400G/800G ports for high-performance TCP and storage traffic, forming what NVIDIA calls the front-end network.

- The 4 OSFP slots, driven by CX7/CX8 NICs with 800G/1.6T ports, carry RDMA traffic for scaling GB200 out beyond a single rack, forming what NVIDIA calls the back-end network.
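The back-end port counts translate into aggregate scale-out bandwidth as follows. This sketch makes three assumptions: CX7 ports run at 800G and CX8 ports at 1.6T (the text pairs them as "CX7/CX8 800G/1.6T"), all four OSFP ports are active, and the rates are per-direction line rates. The function name is hypothetical.

```python
OSFP_PORTS_PER_TRAY = 4
COMPUTE_TRAYS = 18


def backend_bandwidth_gbps(port_gbps: int) -> tuple[int, int]:
    """Return (per-tray, per-rack) back-end bandwidth in Gb/s, all ports active."""
    per_tray = OSFP_PORTS_PER_TRAY * port_gbps
    return per_tray, per_tray * COMPUTE_TRAYS


for nic, port_gbps in (("CX7", 800), ("CX8", 1600)):
    tray, rack = backend_bandwidth_gbps(port_gbps)
    print(f"{nic}: {tray / 1000} Tb/s per tray, {rack / 1000} Tb/s per rack")
```

If each of the 4 ports serves one of the tray's 4 GPUs (an assumption; the text does not state the mapping), each GPU gets 800G or 1.6T of scale-out bandwidth.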

Because of its design architecture, transmission costs, and chip capabilities, NVIDIA currently supports pure NVLink networking for at most 576 GPUs, equivalent to 8 GB200 NVL72 units. Scaling AI training/inference clusters beyond that requires an RDMA network. Each NVLink 5.0 link provides 100GB/s, and with 18 links per GPU the total is 1.8TB/s per GPU. The fastest single RDMA port today runs at 1.6Tbps (200GB/s), well short of NVLink's per-GPU bandwidth.
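To compare the two fabrics on equal terms, both rates need to be expressed per direction. This sketch assumes that the NVLink figures are bidirectional, as the text states for the 100GB/s link, and that the 1.6Tbps RDMA port rate is a per-direction line rate, which is the usual convention for full-duplex Ethernet and InfiniBand ports. Under those assumptions the gap is 4.5x. Dividing 1.8TB/s by 200GB/s directly would mix the two conventions.

```python
NVL72_GPUS = 72
MAX_NVLINK_GPUS = 576
NVLINK_LINK_GB_S = 100        # bidirectional, per NVLink 5.0 link
LINKS_PER_GPU = 18
RDMA_PORT_GBPS = 1600         # fastest single RDMA port in the text (1.6 Tb/s)

racks_in_nvlink_domain = MAX_NVLINK_GPUS // NVL72_GPUS   # 8
nvlink_bidir_gb_s = NVLINK_LINK_GB_S * LINKS_PER_GPU      # 1800 GB/s per GPU
nvlink_per_dir_gb_s = nvlink_bidir_gb_s // 2              # 900 GB/s each way
rdma_per_dir_gb_s = RDMA_PORT_GBPS // 8                   # 200 GB/s each way
ratio = nvlink_per_dir_gb_s / rdma_per_dir_gb_s

print(f"NVL72 racks in a 576-GPU NVLink domain: {racks_in_nvlink_domain}")
print(f"per direction: NVLink {nvlink_per_dir_gb_s} GB/s vs "
      f"one RDMA port {rdma_per_dir_gb_s} GB/s ({ratio}x)")
```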

Power Configuration

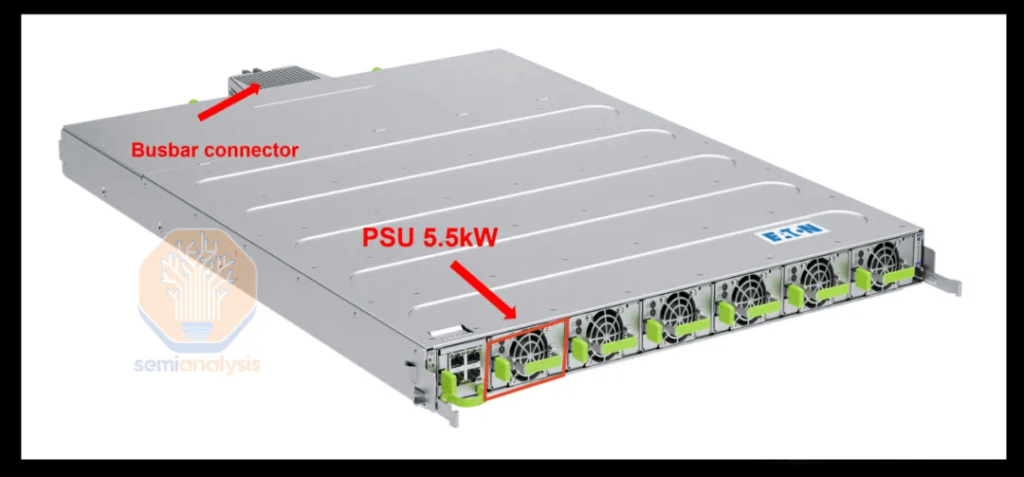

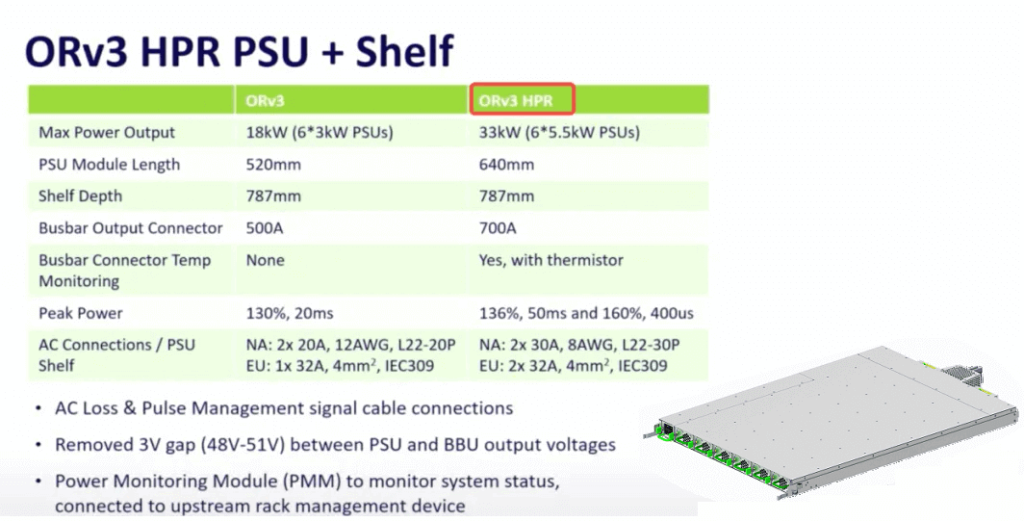

Overall Rated Power Consumption: The system is rated at 120kW overall. The power shelves are configured as 4+4 (2N) or 4+2, each shelf supporting 33kW. Each power shelf holds six 5.5kW PSUs in a 5+1 redundant configuration.
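Four fully populated shelves provide 4 * 33kW = 132kW, which covers the 120kW rating. The sketch below checks the load on each shelf for a few shelf counts. It assumes the rack load is shared evenly across active shelves, which the text does not describe. It compares each shelf's load with both the full 33kW capacity of six PSUs and the 27.5kW that remains after one PSU fails.

```python
PSU_KW = 5.5          # per-PSU rating from the text
PSUS_PER_SHELF = 6    # 5 active + 1 redundant
RACK_LOAD_KW = 120    # overall rated rack power

full_shelf_kw = PSU_KW * PSUS_PER_SHELF               # 33.0 kW, all six PSUs healthy
degraded_shelf_kw = PSU_KW * (PSUS_PER_SHELF - 1)     # 27.5 kW, one PSU failed


def shelf_load_kw(active_shelves: int, load_kw: float = RACK_LOAD_KW) -> float:
    """Per-shelf load, assuming the rack load is shared evenly across active shelves."""
    return load_kw / active_shelves


for label, shelves in (("8 shelves (4+4, normal)", 8),
                       ("6 shelves (4+2, normal)", 6),
                       ("4 shelves (worst case)", 4)):
    load = shelf_load_kw(shelves)
    print(f"{label:<24} {load:5.1f} kW/shelf  "
          f"within 6 PSUs: {load <= full_shelf_kw}  within 5 PSUs: {load <= degraded_shelf_kw}")
```

Under this even-sharing assumption, four remaining shelves each carry 30kW. That is within the 33kW of six healthy PSUs but above the 27.5kW that five can supply.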

Power Shelf Specifications: The power shelves follow OCP's ORv3 HPR power shelf design and exceed 97.5% efficiency, reducing losses in the AC-DC conversion. They also deliver 48V/50V DC to each slot. For the same power, a 48V bus carries a quarter of the current of a traditional 12V bus, so resistive (I²R) transmission losses are much lower.
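The advantage of the higher bus voltage follows from I = P / V and P_loss = I²R. This sketch assumes the same conductor resistance at both voltages and uses the 5400W tray load as an example. It also estimates the AC-DC conversion loss for the 120kW rating at 97.5% efficiency. Because the text says "over 97.5%", that loss is an upper bound. Function names are illustrative.

```python
def bus_current_a(power_w: float, volts: float) -> float:
    """DC current needed to deliver power_w at the given bus voltage (I = P / V)."""
    return power_w / volts


def relative_conduction_loss(v_new: float, v_old: float) -> float:
    """Ratio of I^2*R loss at v_new vs v_old for the same power and resistance.

    Since I = P / V, conduction loss scales as 1 / V^2.
    """
    return (v_old / v_new) ** 2


def conversion_loss_kw(output_kw: float, efficiency: float) -> float:
    """Power lost in conversion for a given output and efficiency."""
    return output_kw / efficiency - output_kw


TRAY_W = 5400
print(f"12 V bus: {bus_current_a(TRAY_W, 12):.1f} A per tray")   # 450.0 A
print(f"48 V bus: {bus_current_a(TRAY_W, 48):.1f} A per tray")   # 112.5 A
print(f"48 V loss relative to 12 V: {relative_conduction_loss(48, 12)}")  # 0.0625
print(f"AC-DC loss at 120 kW, 97.5%: {conversion_loss_kw(120, 0.975):.2f} kW")
```

Quadrupling the voltage cuts the current to a quarter and the conduction loss to 1/16 for the same wiring.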

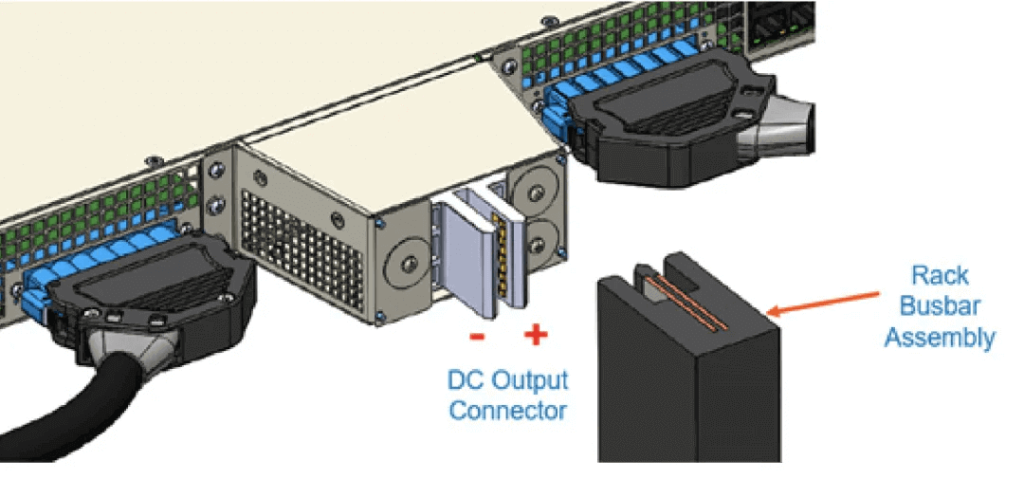

Rack Input Power Standard: The rack input power conforms to OCP’s ORv3 HPR standard, with an AC input of 415V. Each slot is directly connected to the rack’s busbar via hard connections.

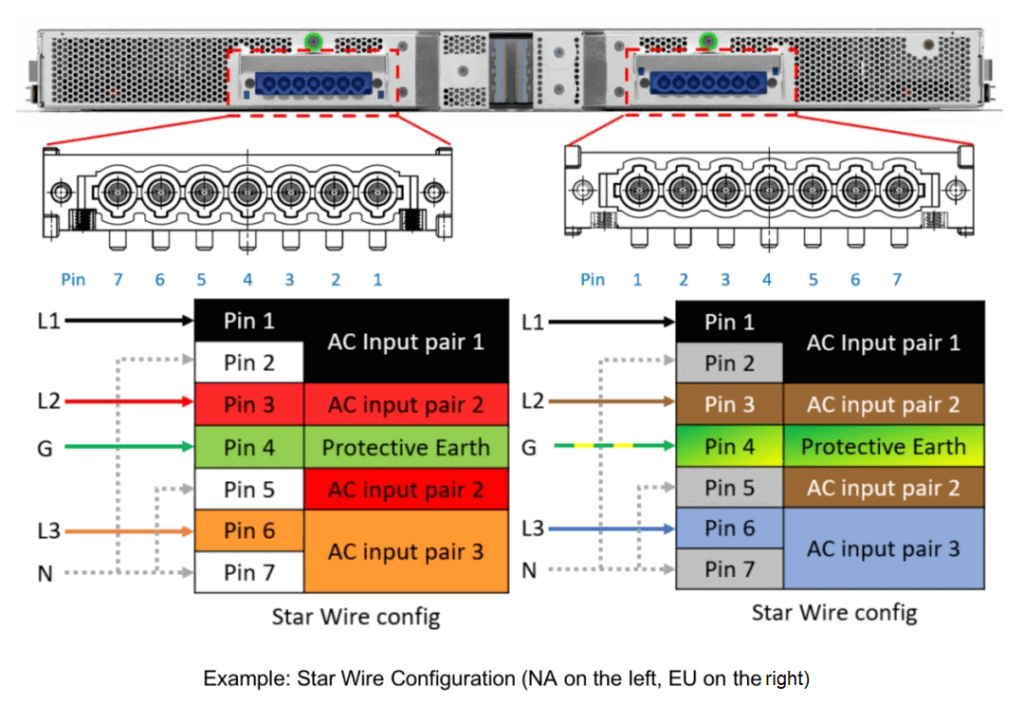

AC Input Configuration: On the AC input side, the system uses ORv3-defined 7-pin connectors. The accompanying diagram shows two connector standards (left: North America; right: Europe). Given the power shelf's 33kW rating, each input likely uses a 125A breaker.

Upstream AC Input Connections: Upstream, the AC input uses standard industrial connectors that comply with IEC 60309-2 and are rated IP67. These portable industrial plugs are rated for a 125A breaker. Depending on the supply's phase configuration, either a 3-pin 125A or a 5-pin 125A version can be used.
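To relate a shelf's power rating to its input current, this sketch uses the standard relation for a balanced three-phase load, I = P / (sqrt(3) * V_LL * PF). It assumes 415V line-to-line (the input voltage stated for the rack), a balanced load, and unity power factor unless another value is passed. Breaker selection also depends on local electrical code, continuous-load derating, and how many shelves share a feed, none of which this sketch models. Treat the result as a starting point, not a breaker recommendation.

```python
import math


def three_phase_line_current_a(power_w: float, v_line_to_line: float,
                               power_factor: float = 1.0) -> float:
    """Line current of a balanced three-phase load: I = P / (sqrt(3) * V_LL * PF)."""
    return power_w / (math.sqrt(3) * v_line_to_line * power_factor)


shelf_a = three_phase_line_current_a(33_000, 415)
print(f"33 kW shelf at 415 V: {shelf_a:.1f} A per phase")
```

Lowering the power factor raises the current proportionally. For example, at a power factor of 0.95 the same shelf draws about 48A per phase.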
