InfiniBand is a high-speed interconnect technology, and its cables carry the data between connected devices. It has become essential in modern computing systems that depend on fast, efficient communication. Its design provides high bandwidth and low latency, making it well suited to data centers, supercomputers, and high-performance computing clusters. This guide covers InfiniBand cables, including their types, working principles, and benefits over conventional networking methods. By looking at technical specifications alongside deployment scenarios, it aims to help readers understand how InfiniBand can meet the requirements of applications that move large amounts of data.

What Is an InfiniBand Cable?

InfiniBand Technology Explained

InfiniBand is a high-speed interconnect architecture used primarily in supercomputing, data centers, and high-performance computing. It is a switched fabric built from point-to-point links, so many data packets can be in flight at once, which improves bandwidth efficiency. The technology provides the low-latency connections needed for real-time processing and supports both synchronous and asynchronous communication modes. In addition, InfiniBand has a modular design: larger fabrics are created by connecting more nodes. Because it can move large amounts of data at high speed, InfiniBand is often preferred over Ethernet and other traditional networks for applications that need very high throughput together with low latency.

InfiniBand vs Ethernet: Key Differences

InfiniBand and Ethernet are both networking technologies, but they serve distinct purposes and environments, particularly in performance and architecture. One main difference is bandwidth per port: current InfiniBand generations reach 200 Gbps (HDR) and 400 Gbps (NDR) on a 4x link, whereas many mainstream Ethernet deployments run at 100 Gbps or below, although faster Ethernet standards also exist.

In addition, because it was designed for low-latency communication, InfiniBand is well suited to applications that need real-time processing, such as high-performance computing (HPC) and other data-intensive tasks. Ethernet latency has been reduced over time, but Ethernet still generally incurs higher protocol overhead, which leads to greater latency during data transmission.

Another important difference is scalability. InfiniBand’s modular fabric design makes it straightforward to add resources in dynamic environments such as data centers, whereas Ethernet networks sometimes need more complex configurations to reach similar levels of scalability.

Finally, InfiniBand supports both reliable and unreliable transport options, which gives flexibility in how data is handled during transmission. Ethernet, by contrast, is a best-effort technology that typically depends on higher-layer protocols such as TCP for reliability, which adds latency. Generally speaking, Ethernet is a versatile, widely adopted, general-purpose network technology, while high-performance applications that move large amounts of data are often better served by InfiniBand’s specialized features.

Types of InfiniBand Cables

Data center environments rely on InfiniBand cables to establish high-speed connections. There are three main types:

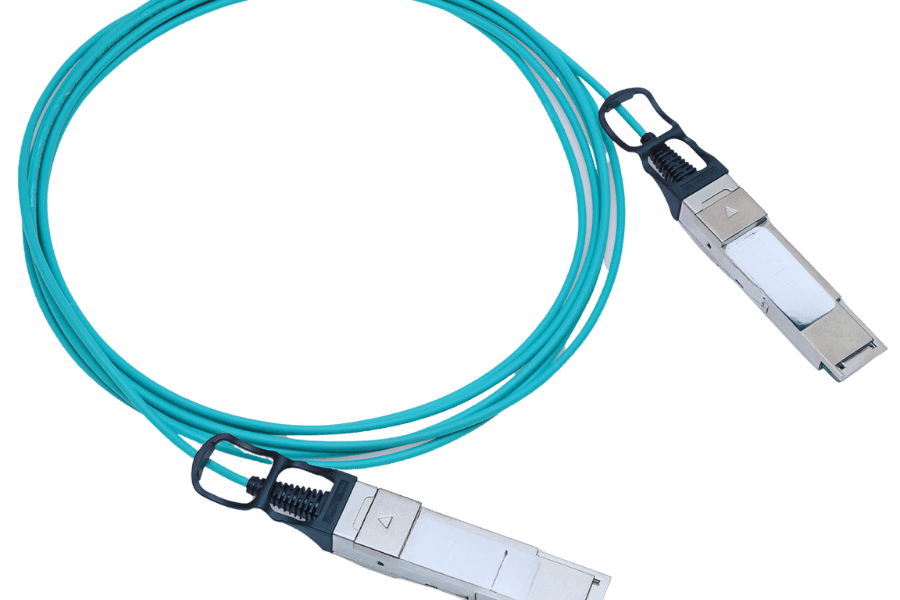

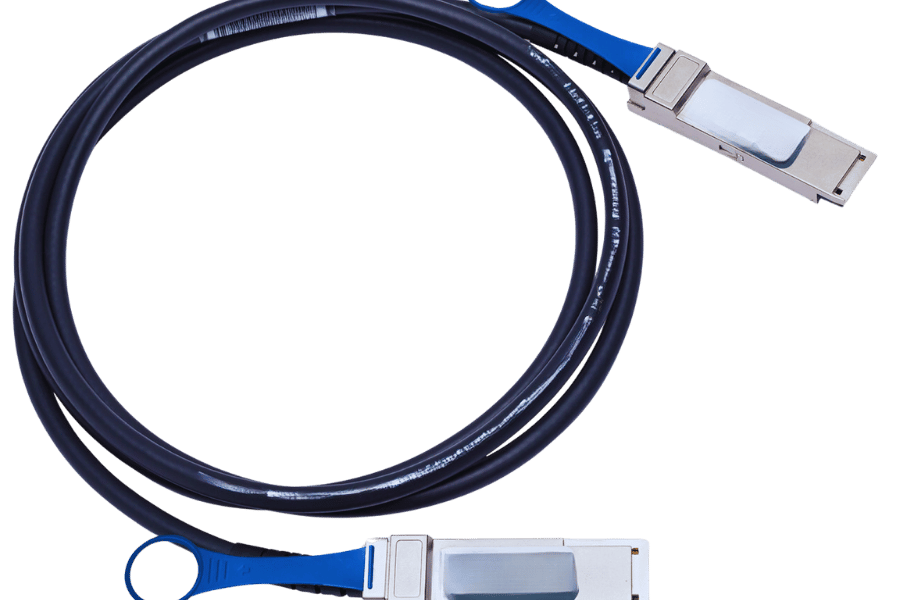

- Active Copper Cables (ACC): These include signal-conditioning electronics that maintain signal quality over longer runs than passive copper allows, while remaining cheaper than optical cables. ACCs work best in short-distance connections, usually within racks, and can transmit data at 100 Gbps or more, depending on the cable generation.

- Passive Copper Cables (PCC): These are cheaper than their active counterparts because they contain no electronics. As a result, they are suitable only for shorter spans, generally up to around 5 m to 10 m, with the usable length decreasing as the data rate rises.

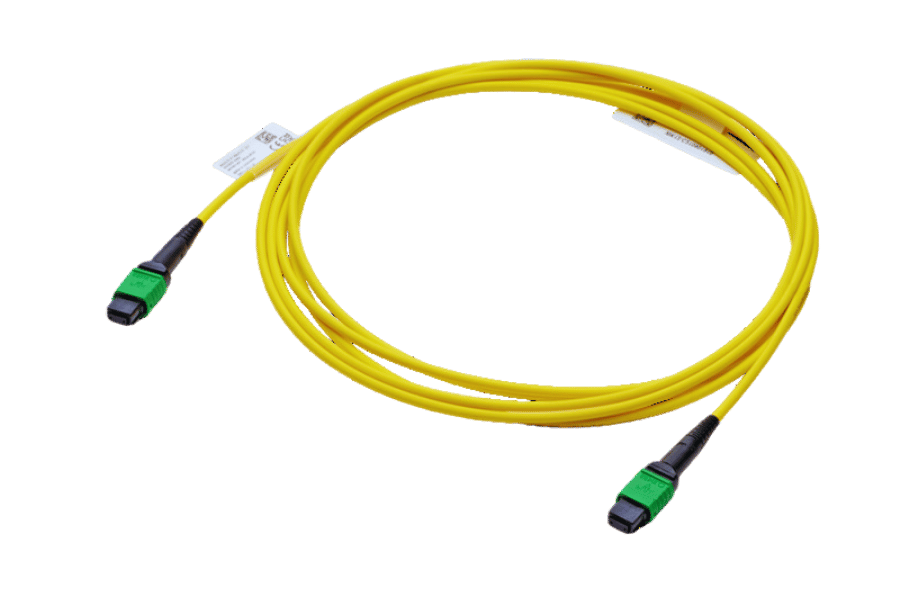

- Optical Fiber Cables: These use light signals for data transmission and offer the highest performance over long distances of the three types. Multi-gigabit speeds can be achieved over several kilometers. This makes optical fiber ideal for interconnecting data centers or connecting different rows within a data hall, serving environments with high bandwidth requirements and low latency demands.

The choice between these cable types depends on the specific use case, weighing distance, cost, and the performance required at each point.
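The distance-based part of that choice can be sketched as a small Python helper. This is only an illustration: the function name `suggest_cable` and the default thresholds (5 m for passive copper, 10 m for active copper) are assumptions chosen for the example, not values from any specification. Real limits depend on the data rate and the vendor's datasheet.

```python
def suggest_cable(distance_m: float,
                  passive_max_m: float = 5.0,
                  active_max_m: float = 10.0) -> str:
    """Suggest a cable type from link distance alone.

    The two thresholds are illustrative assumptions; replace them with
    the reach figures from the cable vendor's datasheet.
    """
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    if passive_max_m > active_max_m:
        raise ValueError("passive_max_m must not exceed active_max_m")

    if distance_m <= passive_max_m:
        return "passive copper"   # cheapest option, no electronics
    if distance_m <= active_max_m:
        return "active copper"    # signal conditioning extends the reach
    return "optical fiber"        # longest reach, highest cost


if __name__ == "__main__":
    for d in (2, 8, 50):
        print(f"{d} m -> {suggest_cable(d)}")
```

Cost and required performance would still need to be weighed separately; the helper only narrows the options by reach.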

What Are the Specifications of InfiniBand Cables?

InfiniBand Connection Standards

The InfiniBand Trade Association defines the standards for InfiniBand connections, including several specifications that ensure compatibility and performance for high-speed data transfer. Here are some of the most important connection standards:

- Data Rate Standards: InfiniBand supports a range of data rates, quoted here as nominal per-lane figures: SDR (Single Data Rate) at 2.5 Gbps, DDR (Double Data Rate) at 5 Gbps, QDR (Quad Data Rate) at 10 Gbps, FDR (Fourteen Data Rate) at 14 Gbps, EDR (Enhanced Data Rate) at 25 Gbps, HDR (High Data Rate) at 50 Gbps, and NDR (Next Data Rate) at 100 Gbps. A typical 4x link carries four lanes, so 4x HDR delivers 200 Gbps and 4x NDR delivers 400 Gbps. Each increment allows better performance for modern high-performance computing applications.

- Form Factor Standards: InfiniBand uses QSFP-family connectors for both optical and copper cables, along with standard form factors for different deployment environments, so the connection type can be chosen to suit the application.

- Protocol Standards: InfiniBand supports IP over InfiniBand (IPoIB), which carries ordinary IP packets across the fabric, alongside RDMA protocols, which move data directly between the memory of two hosts with minimal CPU intervention, reducing latency and increasing throughput.

System architects and engineers who design or implement InfiniBand networks within a data center environment should know these standards to achieve the best results.
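Reading the rates in the list above as nominal per-lane figures (an interpretation consistent with the 56 Gbps 4x FDR and 100 Gbps 4x EDR figures in the FAQ), a link's nominal bandwidth is the per-lane rate multiplied by the lane count. The sketch below shows that arithmetic; the names `PER_LANE_GBPS` and `link_rate_gbps` are made up for this example, and the results are nominal figures that ignore encoding and protocol overhead.

```python
# Nominal per-lane rates in Gbps, taken from the list in this article.
PER_LANE_GBPS = {
    "SDR": 2.5,
    "DDR": 5.0,
    "QDR": 10.0,
    "FDR": 14.0,
    "EDR": 25.0,
    "HDR": 50.0,
    "NDR": 100.0,
}


def link_rate_gbps(generation: str, lanes: int = 4) -> float:
    """Return the nominal link rate: per-lane rate times lane count."""
    if lanes < 1:
        raise ValueError("lanes must be at least 1")
    try:
        per_lane = PER_LANE_GBPS[generation.upper()]
    except KeyError:
        raise ValueError(f"unknown generation: {generation!r}") from None
    return per_lane * lanes


if __name__ == "__main__":
    # Print the nominal 4x rate for every generation in the table.
    for gen in PER_LANE_GBPS:
        print(f"4x {gen}: {link_rate_gbps(gen):g} Gbps")
```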

Data Rates and Bandwidth

With InfiniBand, the data rate defines a link’s bandwidth capacity, which determines how quickly data can be transferred and strongly affects overall performance. Nominal per-lane rates range from 2.5 Gbps (SDR) up to 100 Gbps (NDR), so a 4x link ranges from 10 Gbps up to 400 Gbps.

- Bandwidth Utilization: Efficient bandwidth utilization is critical in high-performance computing environments. The InfiniBand architecture is designed to handle multiple data streams simultaneously, which maximizes throughput while minimizing latency.

- Scalability: InfiniBand lets organizations scale their data-transfer capacity as systems grow more complex and data needs increase, adopting newer standards such as HDR or NDR without having to redesign the entire network.

- Real-World Applications: InfiniBand’s high data rates are used across sectors that depend on fast processing of large amounts of information, including scientific research, financial trading, and big data analytics, where data must move quickly and be analyzed in real time. These workloads illustrate why higher connection standards are adopted as performance requirements grow.

Understanding how quickly each interconnect standard can move data between systems helps administrators plan traffic management in InfiniBand-based server farms, avoiding wasted capacity while maintaining high speeds across all links.

Shielding and Build Quality

The performance, durability, and interference resistance of InfiniBand cables depend heavily on their shielding and build quality. Suitable shielding materials protect data integrity in places with electromagnetic interference (EMI). Cables built to industry standards provide reliable protection against this interference, so high-speed signals stay stable even under challenging conditions.

Furthermore, general construction quality, including connector type, cable jacket toughness, and manufacturing precision, affects the reliability and lifespan of these connections. Strong connectors provide physical stability with minimal signal loss, while durable jackets protect the cable from abrasion, wear, and environmental stress. Sustaining optimal network performance over time therefore means investing in high-grade InfiniBand components that can support the demands of modern data-intensive applications.

How Do InfiniBand NDR and HDR Differ?

Understanding InfiniBand NDR

InfiniBand NDR (Next Data Rate) is a major advancement in the InfiniBand architecture, created mainly to increase data rates and lower latency. It supports speeds of up to 400 Gbps, a significant improvement over previous versions. Unlike HDR (High Data Rate), which tops out at 200 Gbps, NDR uses more sophisticated encoding techniques that enable more efficient data transmission, doubling throughput without increasing the number of lanes per link. It also offers better link reliability and more robust error correction to protect data integrity at high speeds. This makes InfiniBand NDR a good fit for applications that need high performance and low latency, such as artificial intelligence, machine learning, and HPC (High-Performance Computing). It is therefore essential to understand these distinctions when optimizing network settings for I/O-intensive workloads.

What is InfiniBand HDR?

InfiniBand HDR (High Data Rate) is a data communication standard that can transfer data at speeds of up to 200 Gbps. It is faster than older versions such as EDR (Enhanced Data Rate), which provides 100 Gbps. HDR uses advanced signal processing and encoding techniques to achieve high throughput with low latency, so it is best suited to applications that require large amounts of bandwidth, such as big data analytics, deep learning, and high-performance computing (HPC). Additionally, it is backward-compatible with earlier InfiniBand generations, so it integrates easily into existing systems. The specification also introduces higher link reliability and lower error rates for more efficient and robust transmission in modern networking scenarios.

Comparing NDR and HDR

There are clear differences when InfiniBand NDR (Next Data Rate) is compared to HDR (High Data Rate). Most notably, NDR supports speeds of up to 400 Gbps, which is twice as fast as the maximum of 200 Gbps achieved with HDR. This additional capacity is essential for advanced applications relying on real-time analytics or extensive data processing.
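To make the twofold difference concrete, the following sketch estimates how long an idealized bulk transfer would take at each rate. The function name `transfer_seconds` and its `efficiency` parameter are hypothetical, and the calculation ignores protocol overhead, congestion, and storage limits, so real transfers will take longer.

```python
def transfer_seconds(size_gigabytes: float,
                     link_gbps: float,
                     efficiency: float = 1.0) -> float:
    """Idealized transfer time for a payload over a single link.

    size_gigabytes uses decimal gigabytes (1 GB = 1e9 bytes).
    efficiency is the assumed usable fraction of the nominal rate.
    """
    if size_gigabytes < 0 or link_gbps <= 0:
        raise ValueError("size must be >= 0 and link rate must be > 0")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_gigabytes * 8e9                 # bytes -> bits
    return bits / (link_gbps * 1e9 * efficiency)


if __name__ == "__main__":
    payload_gb = 1000  # a 1 TB data set, chosen only as an example
    for name, rate in (("HDR", 200), ("NDR", 400)):
        print(f"{name}: {transfer_seconds(payload_gb, rate):.1f} s")
```

Under these idealized assumptions, a 1 TB payload takes about 40 seconds over a 200 Gbps HDR link and about 20 seconds over a 400 Gbps NDR link.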

NDR also uses more sophisticated signal integrity and error correction methods than HDR, providing better reliability. Although both were created with low latency in mind, these improvements allow NDR to perform significantly better in environments where speed and accuracy are critical, such as artificial intelligence or machine learning systems. Last but not least, while HDR maintains backward compatibility with previous InfiniBand technologies, organizations planning an upgrade to NDR need to consider interoperability with their existing infrastructure to achieve optimal performance.
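When HDR and NDR equipment is mixed, it can help to confirm the rate each link actually negotiated. On Linux hosts, the InfiniBand drivers typically expose this as a text file at `/sys/class/infiniband/<device>/ports/<port>/rate`, containing a string such as `200 Gb/sec (4X HDR)`. The sketch below parses that string. The exact format is an assumption based on common driver output, and the helper names `parse_rate` and `read_port_rate` are made up for this example.

```python
import re
from pathlib import Path

# Assumed format: "<number> Gb/sec (<lanes>X [<generation>])"
RATE_RE = re.compile(r"^\s*([\d.]+)\s*Gb/sec\s*\((\d+)X(?:\s+(\w+))?\)")


def parse_rate(text: str) -> tuple[float, int, str]:
    """Split a rate string into (Gbps, lane count, generation label)."""
    match = RATE_RE.match(text)
    if match is None:
        raise ValueError(f"unrecognized rate string: {text!r}")
    gbps = float(match.group(1))
    lanes = int(match.group(2))
    generation = match.group(3) or ""  # some outputs omit the label
    return gbps, lanes, generation


def read_port_rate(device: str, port: int = 1) -> tuple[float, int, str]:
    """Read and parse the negotiated rate of one port from sysfs."""
    path = Path("/sys/class/infiniband") / device / "ports" / str(port) / "rate"
    return parse_rate(path.read_text())
```

On a host with an InfiniBand adapter, `read_port_rate("mlx5_0")` would return the negotiated rate for port 1, where `mlx5_0` is only an example device name. A link that reports HDR on NDR-capable hardware suggests the cable or the peer port is limiting the speed.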

What Are the Applications of InfiniBand Cables?

InfiniBand in Data Centers

Because it allows servers and storage systems to communicate with each other at high speeds with low latency, InfiniBand technology is an essential part of today’s data centers. It supports distributed computing applications and efficient data processing, making it perfect for use in high-performance computing (HPC) environments. For example, organizations can connect multiple nodes using this technology when working on artificial intelligence, deep learning, or scientific simulations.

Additionally, scalability is among InfiniBand’s strong points, especially in large-scale data centers that need to grow without overhauling their infrastructure. The protocol also supports RDMA (Remote Direct Memory Access), which improves performance by allowing one computer to access memory on another directly without involving the operating system, reducing CPU overhead and speeding up applications. As more data centers move towards cloud and edge computing models, adoption of InfiniBand is likely to increase because it provides the strong, reliable connectivity these environments need.

High-Performance Computing (HPC)

High-performance computing refers to using multiple computers together to process large amounts of data quickly and to run complex simulations. It is used in many fields, such as science, engineering, and finance. HPC systems need powerful processors and advanced parallel-processing techniques, which allow them to work much faster than standard PCs. Integrating InfiniBand into HPC environments is especially useful because it provides high bandwidth and very low latency for communication between cluster nodes. This becomes essential for tasks that require substantial computational power, such as climate modeling, molecular dynamics simulation, or machine learning on large data sets. Development in this area continues to bring better results: adding GPUs and other accelerators improves energy efficiency and performance, making high-performance computing an even more important tool for data-driven industries.

Networking in Enterprise Environments

Networking is essential in business as it ensures effective communication, information sharing, and operational efficiency. Organizations use different networking technologies to interconnect devices, users, and applications. Local area networks (LANs), wide area networks (WANs), and cloud-based solutions are key components of enterprise networking that create a comprehensive infrastructure for realizing company objectives.

Adopting robust network management tools can improve traffic monitoring, troubleshooting, and the maintenance of security protocols. Software-defined networking (SDN) and network function virtualization (NFV) are among the technologies that let businesses respond faster to change, providing elasticity and scalability. Additionally, secure architectures have become a significant concern for many enterprises as cyber threats advance, leading them to deploy multiple layers of defense together with encryption protocols that safeguard private data. Good networking strategies therefore enhance productivity and support continuity planning against disruptions to the organization’s activities.

How to Choose the Right InfiniBand Cable?

Passive vs Active Optical Cables

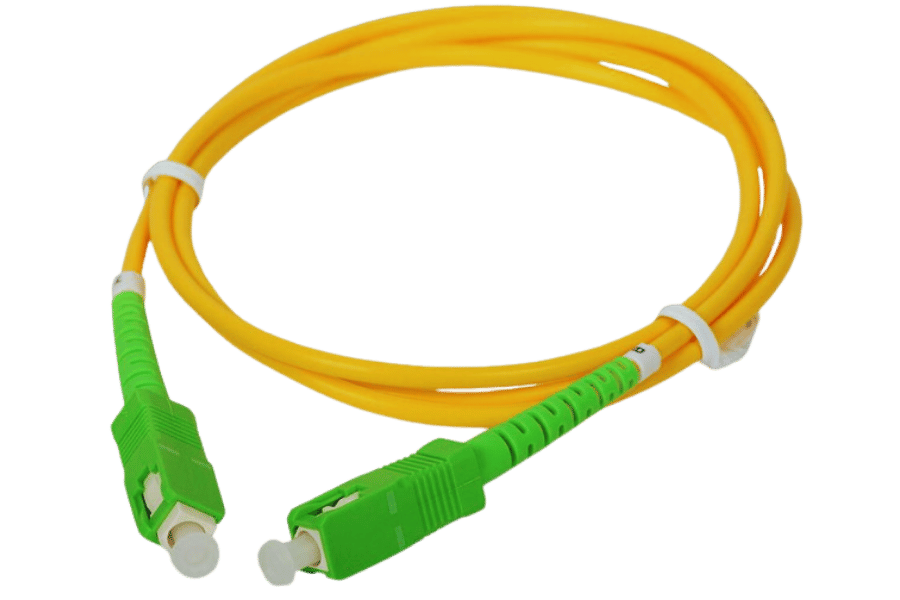

To pick the right cable for your needs, you need to know what passive and active optical cables each do best. Passive optical cables (POCs) contain no electronic parts; they rely on the properties of the optical fiber itself to transmit information. POCs are mostly used for short-distance connections because of their low cost and simple manufacture. They are lightweight and flexible, which makes them ideal for areas with limited space.

On the other hand, active optical cables (AOCs) have integrated transceivers on both ends, enabling longer distances and better signal integrity over those lengths. They can therefore be used in high-performance data centers and other environments where high bandwidth is needed over longer distances, such as between equipment racks. Although AOCs may cost more than POCs, their active electronics compensate for signal attenuation, improving performance.

In conclusion, the choice between passive and active optical cables depends on several factors, including distance requirements, bandwidth needs, and budget limitations. None of these factors should be ignored, because each one affects how well the cabling serves the organization’s network architecture.

Connector Types: QSFP56, QSFP

The Quad Small Form-factor Pluggable (QSFP) connector series is designed for high-density data communication applications such as Ethernet and InfiniBand. QSFP56 improves on earlier variants such as QSFP+ and supports data rates of up to 200 Gbps. It achieves this with four 50 Gbps channels, which makes it well suited to demanding environments such as high-speed data centers.

QSFP connectors are essential to efficient network design because they work with different cabling technologies, both copper and fiber optic. QSFP56 can therefore be used in enterprise networking, telecommunications, and high-performance computing, among other areas, giving businesses room to grow as their data needs increase. When choosing a connection type, organizations should also consider power consumption, insertion loss, and overall network architecture to achieve maximum performance and reliability.

Considering Other Factors Like Bend Radius and Shielding

When selecting cabling, remember to assess the bend radius and shielding properties to maintain network performance over time. The minimum bend radius is the smallest radius to which a cable can be bent without damage or loss of performance. Bending a cable more tightly may cause signal loss, increased attenuation, or even complete cable failure, especially with optical fiber and high-speed copper cables. During installation, it is important to stay within the manufacturer’s bend radius guidelines so as not to compromise system functionality.
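A bend radius check can be sketched as follows. Manufacturers often state the minimum bend radius as a multiple of the cable's outer diameter; the default multiplier of 10 used here is only a placeholder assumption, and the function names are made up for this example. The value from the cable's datasheet should always take precedence.

```python
def min_bend_radius_mm(outer_diameter_mm: float,
                       multiplier: float = 10.0) -> float:
    """Minimum bend radius as a multiple of the cable's outer diameter.

    The default multiplier is a placeholder assumption; use the figure
    from the manufacturer's datasheet for the actual cable.
    """
    if outer_diameter_mm <= 0 or multiplier <= 0:
        raise ValueError("diameter and multiplier must be positive")
    return outer_diameter_mm * multiplier


def bend_is_safe(planned_radius_mm: float,
                 outer_diameter_mm: float,
                 multiplier: float = 10.0) -> bool:
    """Return True if the planned bend is no tighter than the minimum."""
    return planned_radius_mm >= min_bend_radius_mm(outer_diameter_mm, multiplier)


if __name__ == "__main__":
    diameter = 4.0  # example outer diameter in millimetres
    for radius in (30.0, 50.0):
        verdict = "ok" if bend_is_safe(radius, diameter) else "too tight"
        print(f"{radius:g} mm bend on a {diameter:g} mm cable: {verdict}")
```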

Another important consideration is shielding, which protects against electromagnetic interference (EMI) and crosstalk, mainly on copper cables. Shielded cables can significantly improve signal integrity in environments with a lot of electromagnetic noise. Choosing between unshielded twisted pair (UTP) and shielded twisted pair (STP) depends on the operating environment and expected interference levels. Choosing products with adequate shielding and suitable bend radius specifications therefore helps build a reliable network infrastructure that can sustain high data throughput with stable performance.

Frequently Asked Questions (FAQs)

Q: What are InfiniBand cables used for?

A: InfiniBand cables are used in high-performance computing (HPC) environments, supercomputers, and data centers to provide fast, low-latency data transfer between servers, storage systems, and network devices such as routers and switches.

Q: How does InfiniBand compare to Ethernet?

A: Compared with Ethernet cables, InfiniBand typically has higher bandwidth and lower latency. While Ethernet has a wide range of enterprise applications, InfiniBand is designed specifically for environments requiring high-speed, low-latency communication, like advanced HPC systems.

Q: What types of InfiniBand cables are available?

A: There are different types of InfiniBand cables, including passive copper cables, direct-attached copper (DAC) cables, active copper cables, and fiber optic cables. They are also rated for different data rates, such as SDR, DDR, QDR, FDR, EDR, HDR, and NDR.

Q: What does ‘4x’ mean in InfiniBand cables?

A: The ‘4x’ represents the number of lanes or channels within the cable, which affects the rate at which data can be transferred. For instance, 4x will have more bandwidth than 1x.

Q: Where might I use 4x InfiniBand cables?

A: These are typically used in networking or data center scenarios where high performance and low latency are required, such as those found within supercomputers or other advanced HPC systems.

Q: What is InfiniBand FDR?

A: FDR stands for Fourteen Data Rate. It is a version of InfiniBand with data transfer rates of up to 56 Gbps over a 4x link (14 Gbps per lane). Such a connection is used mainly in environments where high-speed transfers are needed.

Q: How does InfiniBand EDR differ from FDR?

A: Enhanced Data Rate (EDR) has higher data transfer rates than Fourteen Data Rate (FDR). It can reach up to 100 Gbps over a 4x link, making it well suited to applications that demand high throughput and low latency.

Q: Are there any InfiniBand cables that are compatible with PCI Express?

A: Yes. InfiniBand cables connect to servers and storage devices through host channel adapters (HCAs), which plug into PCI Express slots. These adapters give the host a high-speed connection to the InfiniBand fabric.

Q: What are some advantages of using passive copper InfiniBand cables?

A: Passive copper cables are cost-effective and easy to install. They provide reliable, low-latency connectivity. However, their range is limited compared to active cables or fiber optic solutions.

Q: What does an InfiniBand transceiver do?

A: An InfiniBand transceiver converts electrical signals into optical ones and vice versa, enabling fast communication over longer distances using fiber optic connections. This component ensures top performance levels in data-intensive environments.